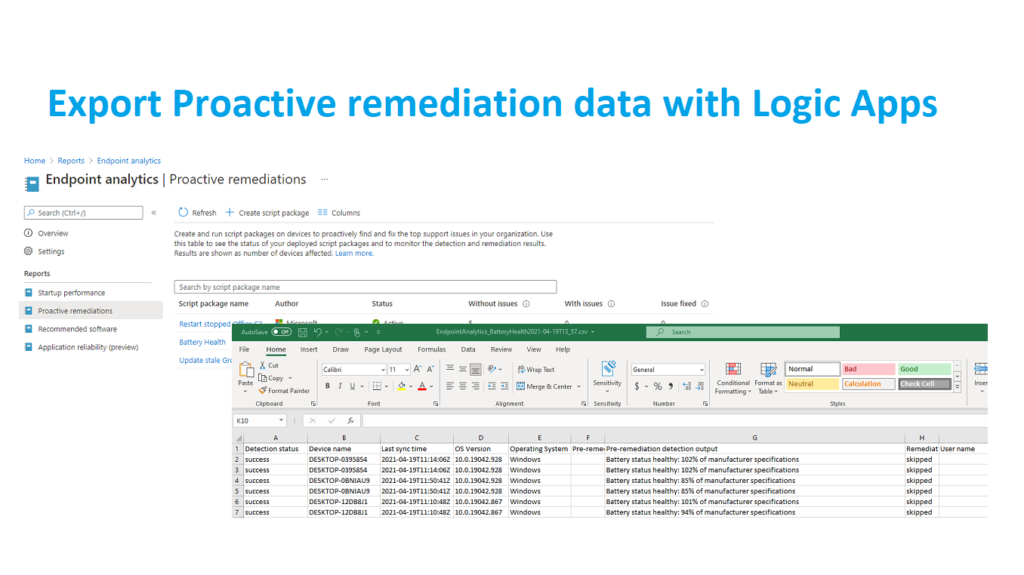

Since last year, we have been able to schedule PowerShell scripts in Microsoft Intune as part of the Endpoint Analytics feature: the so-called Proactive Remediation scripts. Every scheduled script has a Device status tab with details on the status of the devices and the script. However, there is still no option to export this helpful data (for example, to a CSV file). With the help of Microsoft Graph, we can export this data manually. With Logic Apps, we can even schedule this export job so we can process the data on a regular basis.

In this example, I show how to export the script status of the Battery Replacement Proactive Remediation script, written by Maurice Daly.

The solution in short

Only a few actions are involved in this flow. In its simplest form, the flow uses an HTTP action to query Microsoft Graph for the data we need. A Select action then grabs the relevant items from the HTTP output for every device. This is followed by a Create CSV table action, which converts the data to CSV format. The last action is a SharePoint Create file action, which stores the data in a CSV file in a SharePoint folder.

To secure the HTTP action, I use an Azure Key Vault action.

In a separate section of this post, I also show how to use this flow to export the status of all scripts at once, not just one.

Requirements

To run this flow, we have some requirements to meet.

First of all, we need the appropriate permissions to run the Graph queries. The HTTP action in the flow uses an Azure App Registration to authenticate to Microsoft Graph. On this App Registration, we set the permissions required for our Graph queries.

The minimum required permission is DeviceManagementConfiguration.Read.All.

In this previous blog post I described in detail how to create an App Registration.

To secure the HTTP actions I use an Azure Key Vault, which is described in this previous post.

To store the CSV file on SharePoint, our service account needs permissions to create files.

Set up the Logic App

Sign in to the Azure portal and open the Logic Apps service. Create a new, blank Logic App.

In the search box for connectors and triggers, search for Schedule. Select Schedule and then Recurrence, which serves as our flow trigger.

Choose the recurrence schedule.

The first action we add is an Azure Key Vault action to retrieve the secret for authentication of the upcoming HTTP action. Search for Azure Key Vault and select Get secret.

Enter at least the Vault name and click Sign in.

Enter the name of the secret.

Next, we add an HTTP action. To run this action, we need the ID of the Proactive Remediation script. The Graph URI uses this ID to query the status of the devices for the script.

There are several ways to look up the ID. One is via the Intune portal, using the browser’s developer tools.

Another is to run the following query in Graph Explorer, which lists all device health scripts with their IDs.

https://graph.microsoft.com/beta/deviceManagement/deviceHealthScripts

Add the HTTP action in the Logic Apps flow.

As Method select GET.

As URI enter:

https://graph.microsoft.com/beta/deviceManagement/deviceHealthScripts/[ID]/deviceRunStates?&$expand=managedDevice&$filter=

Replace [ID] with the ID you found by running the previous query.
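The sketch below makes the ID lookup and the URI concrete in Python. It assumes you already have the parsed JSON body of the deviceHealthScripts query from Graph Explorer, which is a dict with a value array. It finds a script ID by its displayName and builds the same deviceRunStates URI as above. The function names are hypothetical, and the sketch does not call Graph itself.

```python
GRAPH_BASE = "https://graph.microsoft.com/beta/deviceManagement/deviceHealthScripts"


def find_script_id(scripts_body: dict, display_name: str) -> str:
    """Return the id of the device health script with the given displayName."""
    for script in scripts_body.get("value", []):
        if script.get("displayName") == display_name:
            return script["id"]
    raise KeyError(f"No device health script named {display_name!r}")


def run_states_uri(script_id: str) -> str:
    """Build the deviceRunStates URI used in the HTTP action (managedDevice expanded)."""
    return f"{GRAPH_BASE}/{script_id}/deviceRunStates?&$expand=managedDevice&$filter="
```

For example, if your script's display name is "Battery Replacement", `run_states_uri(find_script_id(body, "Battery Replacement"))` gives the URI to paste into the HTTP action.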

Check Authentication and choose Active Directory OAuth as the Authentication type. Enter the Tenant, the Client ID (from the app registration) and the Audience (https://graph.microsoft.com for Microsoft Graph).

As the Secret, make sure to select the secret value from the Key Vault action. You can find it on the right under Dynamic content.
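With Active Directory OAuth configured, Logic Apps requests the access token for you. The sketch below shows roughly what happens behind that setting: an OAuth 2.0 client-credentials request to the Microsoft identity platform v2.0 token endpoint. It builds the request without sending it. Logic Apps' internal implementation may differ; for example, it works with an audience rather than a scope. The function name is hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build (but don't send) a client-credentials token request for Microsoft Graph."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",
        "client_id": client_id,          # Client ID of the App Registration
        "client_secret": client_secret,  # the value the Key Vault action returns
        "scope": "https://graph.microsoft.com/.default",
    }
    return Request(
        url,
        data=urlencode(form).encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

The token endpoint returns JSON with an access_token. The Graph call then sends that token in an `Authorization: Bearer <token>` header.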

When you run the GET query in a large environment, you’ll notice that the output of this action is limited to 1000 items. To raise this limit, enable pagination in the settings of the HTTP action and set a higher threshold. Click the three dots in the upper-right corner and choose Settings.

Switch Pagination on, set a threshold and click Done.
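With pagination on, the action follows the next-page link (@odata.nextLink for Graph) until no link is left or the threshold is reached. The sketch below shows that logic. It assumes a hypothetical `fetch` callable that performs the authenticated GET and returns the parsed JSON. The default threshold is only an example value.

```python
from typing import Any, Callable, Iterator


def iterate_pages(first_page: dict, fetch: Callable[[str], dict],
                  threshold: int = 5000) -> Iterator[Any]:
    """Yield items from a paged Graph response, following @odata.nextLink.

    `fetch` is a hypothetical callable: next-page URL -> parsed JSON body.
    `threshold` plays the role of the pagination threshold (example value).
    """
    page = first_page
    count = 0
    while True:
        for item in page.get("value", []):
            if count >= threshold:
                return
            yield item
            count += 1
        if count >= threshold:
            return  # threshold reached exactly at a page boundary: don't fetch more
        next_link = page.get("@odata.nextLink")
        if not next_link:
            return  # last page: Graph omits @odata.nextLink
        page = fetch(next_link)
```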

Next, we add a Select action, which is a Data Operations action.

With this action, we select the items from the HTTP output which we want to add to our CSV file in the next action.

In the From box, we add the output of the previous action. However, we only need the values from the value array.

Below is an example of the HTTP action output as a reference.

We use an expression to get the data from the value array. Paste the expression below into the From field. Make sure to replace HTTP_ACTION_NAME with the name of your HTTP action, using underscores instead of spaces.

body('HTTP_ACTION_NAME')?['value']

Next, enter all the key names on the left. You can choose the key names yourself.

On the right, we need the values (items) from the previous HTTP action.

We need the items located in the first object of the HTTP output (after the first bracket). We also need items from the second, nested managedDevice object (after the second bracket).

To grab items which are located in the first object we use:

item()?['ITEMNAME']

For example, to get the detectionState:

item()?['detectionState']

If the item is located in the second object, we use:

item()?['managedDevice']?['ITEMNAME']

For example, to get the device name:

item()?['managedDevice']?['deviceName']

These are the expressions I use in this example:

item()?['managedDevice']?['deviceName']

item()?['managedDevice']?['userPrincipalName']

item()?['detectionState']

item()?['remediationState']

item()?['managedDevice']?['operatingSystem']

item()?['managedDevice']?['osVersion']

item()?['lastSyncDateTime']

item()?['preRemediationDetectionScriptError']

item()?['preRemediationDetectionScriptOutput']

Enter the expressions one by one.

This is the complete Select action
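To check the mapping outside Logic Apps, you can mirror the Select action in Python. This sketch assumes the HTTP output is already parsed into a dict. `safe_get` imitates the null-safe `?[...]` operator, returning None instead of failing when a level is missing. The column names on the left are my own hypothetical choices; as in the Select action, you can pick any names.

```python
from typing import Any, Dict, List

# Key name in the CSV -> path into each item, mirroring the expressions above.
COLUMNS = {
    "DeviceName": ("managedDevice", "deviceName"),
    "UPN": ("managedDevice", "userPrincipalName"),
    "DetectionState": ("detectionState",),
    "RemediationState": ("remediationState",),
    "OS": ("managedDevice", "operatingSystem"),
    "OSVersion": ("managedDevice", "osVersion"),
    "LastSync": ("lastSyncDateTime",),
    "DetectionError": ("preRemediationDetectionScriptError",),
    "DetectionOutput": ("preRemediationDetectionScriptOutput",),
}


def safe_get(obj: Any, *path: str) -> Any:
    """Null-safe lookup like item()?['a']?['b']: None if any level is missing."""
    for key in path:
        if not isinstance(obj, dict):
            return None
        obj = obj.get(key)
    return obj


def select_rows(body: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Equivalent of a Select action with From = body('HTTP')?['value']."""
    items = safe_get(body, "value") or []
    return [{name: safe_get(item, *path) for name, path in COLUMNS.items()}
            for item in items]
```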

Again we use a Data Operations action, this time the Create CSV table action.

In the From field, add the Output of the Select action. Make sure Columns is set to Automatic.
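The Create CSV table step can be approximated in Python with the standard csv module. This sketch takes the header from the first row's keys. That works here because every row from the Select action has the same keys. It is not a claim about exactly how Logic Apps derives automatic columns. Missing values (None) become empty cells, and values containing commas are quoted.

```python
import csv
import io
from typing import Any, Dict, List


def to_csv(rows: List[Dict[str, Any]]) -> str:
    """Approximate Create CSV table with Columns = Automatic."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)  # None is written as an empty field
    return buffer.getvalue()
```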

The last action is a SharePoint action. Add the Create file action to the flow.

Select the Site address of the SharePoint site where you want to create the CSV file, or choose Enter custom value if your site address isn’t listed.

Choose your folder via the folder icon and enter a File name.

As the flow isn’t able to overwrite an existing file, we use a dynamic value in the file name. I enter the expression below to put the current date and time in the file name, followed by .csv. SharePoint doesn’t allow a colon in file names, so the time format leaves it out.

formatDateTime(utcNow(),'yyyy-MM-ddTHHmm')

As File content, we add the output of the Create CSV table action.
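The sketch below builds a similar timestamped file name in Python. It leaves out the colon in the time and replaces any other character SharePoint Online doesn't accept in file names (" * : < > ? / \ |) with an underscore. The function name and the optional prefix parameter are hypothetical. The prefix is useful later, when each file gets the script's display name.

```python
from datetime import datetime, timezone
from typing import Optional

# Characters SharePoint Online doesn't allow in file names.
INVALID_CHARS = '"*:<>?/\\|'


def csv_file_name(prefix: str = "", now: Optional[datetime] = None) -> str:
    """Build a unique, SharePoint-safe CSV file name from the current UTC time."""
    if now is None:
        now = datetime.now(timezone.utc)
    stamp = now.strftime("%Y-%m-%dT%H%M")  # same pattern as 'yyyy-MM-ddTHHmm'
    name = f"{prefix}{stamp}.csv"
    return "".join("_" if ch in INVALID_CHARS else ch for ch in name)
```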

This is the whole flow to export the Proactive Remediation data to a CSV file and store the file on SharePoint.

Optional config to export all scripts at once

Above, I showed how to set up a minimal flow that exports the status of only one Proactive Remediation script. It’s also possible to export the data of all scripts with one flow. Keep in mind that if you use this in a large environment, the flow can run for quite a long time and you might hit some thresholds.

We have to add two actions to our flow. The first is an additional HTTP action to query all Proactive Remediation scripts. The second is a Parse JSON action, which parses the output of that HTTP action so we can use its data in the original HTTP action. With the additional steps, our flow looks like this.

Add a new HTTP action directly after the Get secret action.

As Method select GET.

As URI enter:

https://graph.microsoft.com/beta/deviceManagement/deviceHealthScripts

Don’t forget to fill in the authentication details.

Next add a Parse JSON action to the flow.

As content we use the Body from the new HTTP action.

We generate the schema from a sample payload. Run the GET query from the previous action in Graph Explorer and copy the output. In the Parse JSON action, click Use sample payload to generate schema. Paste the output from Graph Explorer into the text box, which generates the schema.

Open the original HTTP action and remove the ID in the URI box. We need to replace that with a variable from the Parse JSON action. Search for ID on the Dynamic content tab.

The ID is now replaced with the variable from the Parse JSON action. And the HTTP action is automatically placed in a For each action. This will make sure that all the scripts are handled by the flow.

Move the other actions into the For each action as well. To move them, you might have to temporarily remove the Output variables from the actions. Don’t forget to add them back afterwards.

To make every CSV file easy to recognize, I used the displayName variable from the Parse JSON action in the File name.
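The For each loop can be pictured as producing one export job per script returned by the deviceHealthScripts query. This Python sketch assumes the parsed JSON body of that query. For each script, it builds the run-states URI and a file-name prefix from the displayName, with characters SharePoint doesn't accept replaced by underscores. The ExportJob type and the function name are hypothetical. Falling back to the ID when a displayName is missing is my own assumption.

```python
from typing import Iterator, NamedTuple

GRAPH_BASE = "https://graph.microsoft.com/beta/deviceManagement/deviceHealthScripts"
INVALID_CHARS = '"*:<>?/\\|'  # not allowed in SharePoint Online file names


class ExportJob(NamedTuple):
    display_name: str
    run_states_uri: str
    file_prefix: str


def export_jobs(scripts_body: dict) -> Iterator[ExportJob]:
    """Mimic the For each loop: one export job per device health script."""
    for script in scripts_body.get("value", []):
        name = script.get("displayName") or script["id"]  # assumption: fall back to id
        safe = "".join("_" if ch in INVALID_CHARS else ch for ch in name)
        uri = f"{GRAPH_BASE}/{script['id']}/deviceRunStates?&$expand=managedDevice&$filter="
        yield ExportJob(name, uri, safe)
```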

The end result

The end result is a Logic Apps flow that runs on a predefined schedule. It grabs the Proactive Remediation results from Graph, converts the data to a CSV table and saves it to a file on SharePoint.

If you used the additional actions to export all scripts, you get one CSV file per script.

That’s it for this automation post. Thanks for reading!